Sketching is a relatively recent development in computer science and in the theoretical literature is often referred to as a class of Streaming Algorithms1, Sketches implement algorithms that can extract information from a stream of data in a single pass, which is also known as “one-touch” processing. Some sketches can be deterministic, although most sketches are probabilistic in their behavior and take advantage of various randomization techniques.

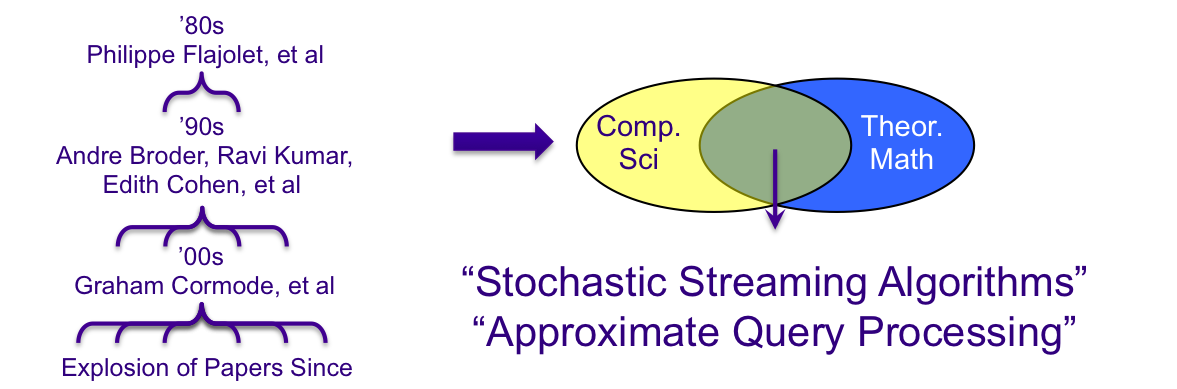

Sketching is a synergistic blend of theoretical mathematics, statistics and computer science, refers to a broad range of algorithms, and has experienced a great deal of interest and growth since the mid 1990’s coinciding with the growth of the Internet and the need to process and analyze Big Data. The term sketch, with its allusion to an artist’s sketch, has become the popular term to describe these algorithms and associated data structures that implement the theory.

The French mathematician Philippe Flajolet is often regarded as the father of sketching with his research in analytic combinatorics and analysis of algorithms. His 1985 paper Probabilistic counting Algorithms for Data Base Applications co-authored with G. Nigel Martin is one of the earliest papers that outlines the sketching concepts. The recent book, Synopses for Massive Data: Samples, Histograms, Wavelets, Sketches by Graham Cormode, et al, is an excellent review of this field.

1Also known as “Approximate Query Processing”, see Sketch Techniques for Approximate Query Processing